Microsoft SwiftKey Keyboard

Type faster with Microsoft SwiftKey – the smart and customizable keyboard that learns your writing style.

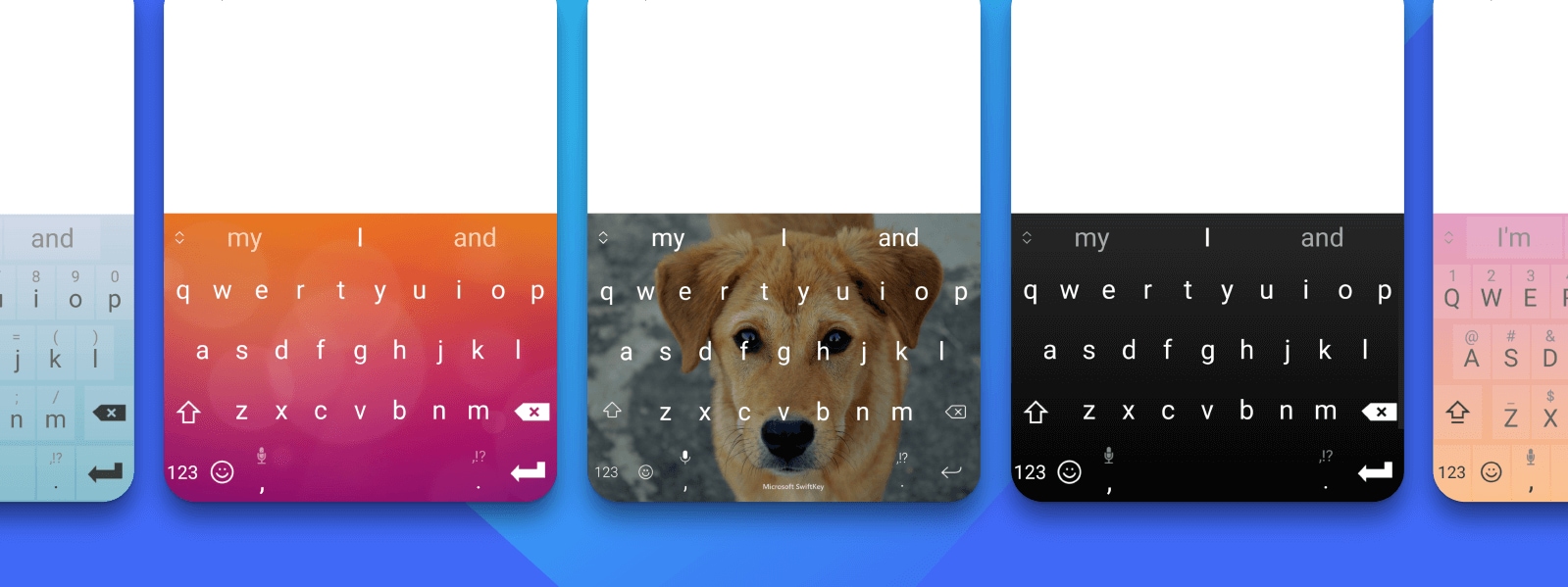

Make it yours

Choose from hundreds of free keyboard themes, or design your own.

Frustration-free typing

Microsoft SwiftKey is packed with features to make typing faster and easier.

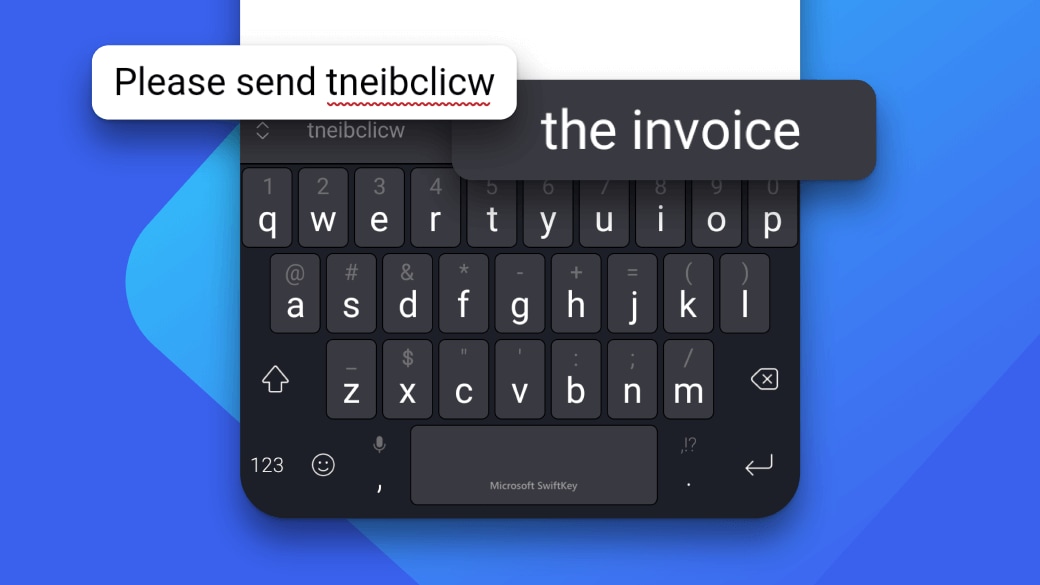

Fast and accurate

Say goodbye to typos. Microsoft SwiftKey spots your misspellings, missed spaces, and missed letters and corrects them for you.

Personalized typing

Customize the toolbar to keep your favorite typing tools at your fingertips, including GIFs, Clipboard, Translator, Stickers, and more.

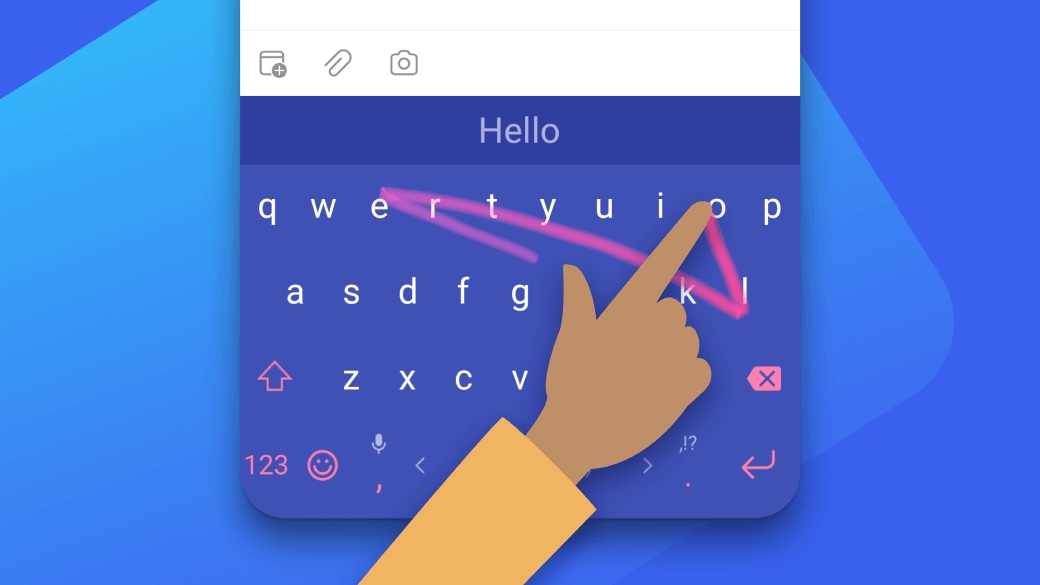

Type with a swipe

Tired of tapping? Easily slide from letter to letter with SwiftKey Flow.

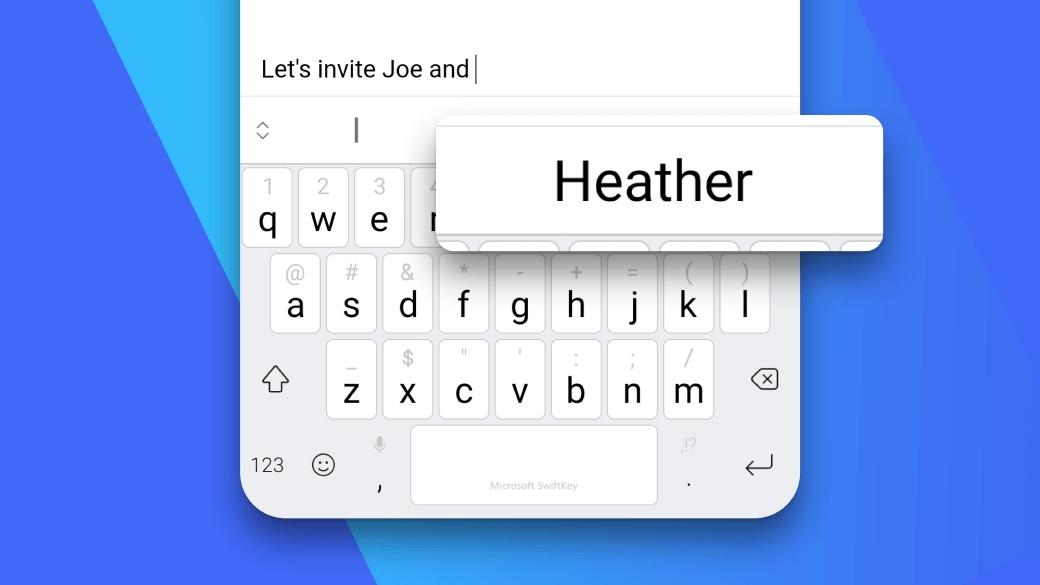

Learns from you

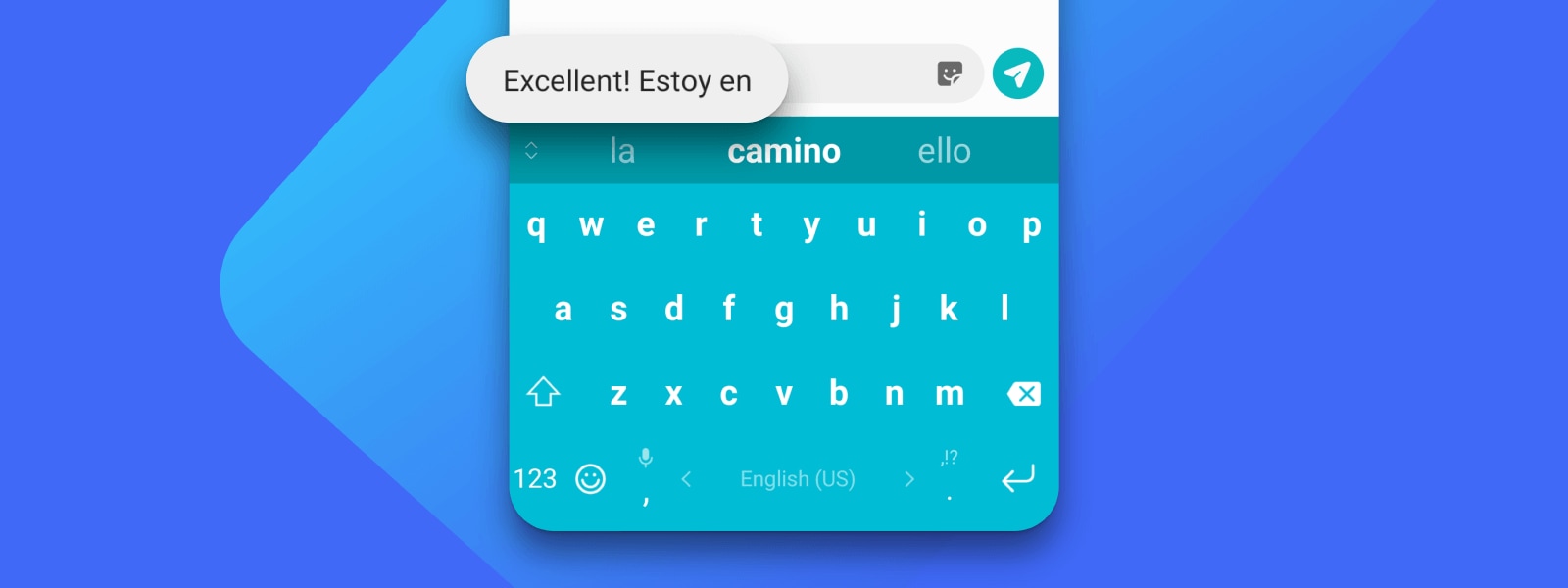

Microsoft SwiftKey learns your writing style to suggest your next word. Enter a whole word with a single tap instead of typing letter by letter.

Cloud clipboard

Sign in to copy and paste text between your phone and your Windows devices.

Task capture

Sign in to save tasks right from your keyboard and manage them in Microsoft To Do.

Type in five languages

Seamlessly type in up to five languages without switching settings. Choose from 700+ supported languages.